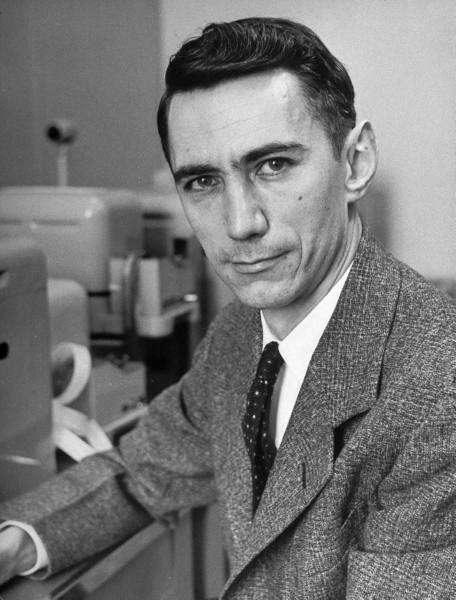

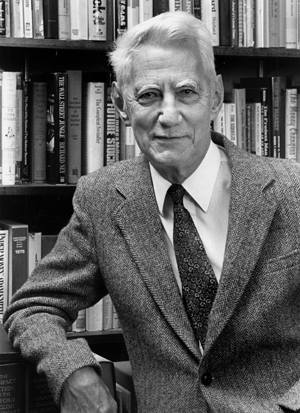

- Mathematician Claude Elwood Shannon, 1916

- Poet Juhan Liiv, 1864

- Minister of Foreign Affairs Ulrich Friedrich Wilhelm Joachim von Ribbentrop, 1893

Claude Elwood Shannon (April 30, 1916 – February 24, 2001), an American mathematician and electronic engineer, is known as "the father of information theory".

Shannon is famous for having founded information theory with one landmark paper published in 1948. He is also credited with founding both digital computer and digital circuit design theory in 1937, when, as a 21-year-old master's student at MIT, he wrote a thesis demonstrating that electrical applications of Boolean algebra could construct and resolve any logical, numerical relationship. It has been claimed that this was the most important master's thesis of all time.

Shannon was born in Petoskey, Michigan. His father, Claude Sr (1862 – 1934), a descendant of early New Jersey settlers, was a businessman and for a while, Judge of Probate. His mother, Mabel Wolf Shannon (1890 – 1945), daughter of German immigrants, was a language teacher and for a number of years principal of Gaylord High School, Michigan. The first sixteen years of Shannon's life were spent in Gaylord, Michigan, where he attended public school, graduating from Gaylord High School in 1932. Shannon showed an inclination towards mechanical things. His best subjects were science and mathematics, and at home he constructed such devices as models of planes, a radio-controlled model boat and a telegraph system to a friend's house half a mile away. While growing up, he worked as a messenger for Western Union.

His childhood hero was Thomas Edison, who he later learned was a distant cousin. Both were descendants of John Ogden, a colonial leader and an ancestor of many distinguished people.

In 1932
he entered the University of Michigan, where he took a course that introduced him to the works of George Boole. He graduated in 1936 with two bachelor's degrees, one in electrical engineering and one in mathematics, then began graduate study at the Massachusetts Institute of Technology (MIT), where he worked on Vannevar Bush's differential analyzer, an analog computer.

While studying the complicated ad hoc circuits of the differential analyzer, Shannon saw that Boole's concepts could be used to great utility. A paper drawn from his 1937 master's thesis, A Symbolic Analysis of Relay and Switching Circuits, was published in the 1938 issue of the Transactions of the American Institute of Electrical Engineers. It also earned Shannon the Alfred Noble Prize in 1940. Howard Gardner, of Harvard University, called Shannon's thesis "possibly the most important, and also the most famous, master's thesis of the century." Victor Shestakov, at Moscow State University, had proposed a theory of electric switches based on Boolean logic slightly earlier than Shannon, in 1935, but the first publication of Shestakov's result took place in 1941, after the publication of Shannon's thesis.

In this
work, Shannon proved that Boolean algebra and binary arithmetic could be used to simplify the arrangement of the electromechanical relays then used in telephone routing switches, then turned the concept upside down and also proved that it should be possible to use arrangements of relays to solve Boolean algebra problems. Exploiting this property of electrical switches to do logic is the basic concept that underlies all electronic digital computers. Shannon's work became the foundation of practical digital circuit design when it became widely known among the electrical engineering community during and after World War II. The theoretical rigor of Shannon's work completely replaced the ad hoc methods that had previously prevailed.

Flush
with this success, Vannevar Bush suggested that Shannon work on his dissertation at Cold Spring Harbor Laboratory, funded by the Carnegie Institution headed by Bush, to develop similar mathematical relationships for Mendelian genetics, which resulted in Shannon's 1940 PhD thesis at MIT, An Algebra for Theoretical Genetics.

In 1940, Shannon became a National Research Fellow at the Institute for Advanced Study in Princeton, New Jersey. At Princeton, Shannon had the opportunity to discuss his ideas with influential scientists and mathematicians such as Hermann Weyl and John von Neumann, and even had the occasional encounter with Albert Einstein. Shannon worked freely across disciplines, and began to shape the ideas that would become information theory.

Shannon
then joined Bell Labs to work on fire-control systems and cryptography during World War II, under a contract with section D-2 (Control Systems section) of the National Defense Research Committee (NDRC).

For two months early in 1943, Shannon came into contact with the leading British cryptanalyst and mathematician Alan Turing. Turing had been posted to Washington to share with the US Navy's cryptanalytic service the methods used by the British Government Code and Cypher School at Bletchley Park to break the ciphers used by the German U-boats in the North Atlantic. He was also interested in the encipherment of speech and to this end spent time at Bell Labs.
Shannon and Turing met every day at teatime in the cafeteria. Turing showed Shannon his seminal 1936 paper that defined what is now known as the "Universal Turing machine", which impressed Shannon, as many of its ideas were complementary to his own.

In 1945, as the war was coming to an end, the NDRC was issuing a summary of technical reports as a last step prior to its eventual closing down.
Inside the volume on fire control, a special essay titled Data Smoothing and Prediction in Fire-Control Systems, coauthored by Shannon, Ralph Beebe Blackman, and Hendrik Wade Bode, formally treated the problem of smoothing the data in fire control by analogy with "the problem of separating a signal from interfering noise in communications systems." In other words, it modeled the problem in terms of data and signal processing and thus heralded the coming of the information age.

His work on cryptography was even more closely related to his later publications on communication theory. At the close of the war, he prepared a classified memorandum for Bell Telephone Labs entitled "A Mathematical Theory of Cryptography," dated September 1945. A declassified version of this paper was subsequently published in 1949 as "Communication Theory of Secrecy Systems" in the Bell System Technical Journal. This paper incorporated many of the concepts and mathematical formulations that also appeared in his A Mathematical Theory of Communication. Shannon said that his wartime insights into communication theory and cryptography developed simultaneously and "they were so close together you couldn’t separate them". In a footnote near the beginning of the classified report, Shannon announced his intention to "develop these results ... in a forthcoming memorandum on the transmission of information."

In 1948 the promised memorandum appeared as "A Mathematical Theory of Communication", an article in two parts in the July and October issues of the Bell System Technical Journal. This work focuses on the problem of how best to encode the information a sender wants to transmit.
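
How compactly a message can be encoded depends on how predictable its symbols are. As a rough illustration only, the sketch below computes the entropy measure introduced in the 1948 paper, H = -Σ p·log2(p), from the symbol frequencies of a short string. The function name `entropy_bits` and the use of empirical frequencies are assumptions made for this example; they do not come from Shannon's paper.

```python
import math
from collections import Counter


def entropy_bits(message: str) -> float:
    """Empirical entropy of the symbols in `message`, in bits per symbol.

    Hypothetical helper for illustration: probabilities are estimated
    from the symbol counts of this one message.
    """
    counts = Counter(message)
    total = len(message)
    # H = -sum(p * log2(p)) over every distinct symbol
    return -sum((n / total) * math.log2(n / total) for n in counts.values())


if __name__ == "__main__":
    for text in ("aaaa", "abab", "abcd"):
        print(text, entropy_bits(text))
```

A message that repeats a single symbol carries no uncertainty, while four equally likely symbols need two bits each on average, which is the sense in which entropy bounds how short an encoding can be.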

In this fundamental work he used tools in probability theory, developed by Norbert Wiener, which were in their nascent stages of being applied to communication theory at that time. Shannon developed information entropy as a measure for the uncertainty in a message while essentially inventing the field of information theory.

The book The Mathematical Theory of Communication, co-authored with Warren Weaver, reprints Shannon's 1948 article and Weaver's popularization of it, which is accessible to the non-specialist. Shannon's concepts were also popularized, subject to his own proofreading, in John Robinson Pierce's Symbols, Signals, and Noise.

Information theory's fundamental contribution to natural language processing and computational linguistics was further established in 1951, in his article "Prediction and Entropy of Printed English", proving that treating whitespace as the 27th letter of the alphabet actually lowers uncertainty in written language, providing a clear quantifiable link between cultural practice and probabilistic cognition.

Another notable paper published in 1949 is "Communication Theory of Secrecy Systems", a declassified version of his wartime work on the mathematical theory of cryptography, in which he proved that all theoretically unbreakable ciphers must have the same requirements as the one-time pad. He is also credited with the introduction of sampling theory, which is concerned with representing a continuous-time signal from a (uniform) discrete set of samples. This theory was essential in enabling telecommunications to move from analog to digital transmission systems in the 1960s and later.

He returned to MIT to hold an endowed chair in 1956.

Outside of his academic pursuits, Shannon was interested in juggling, unicycling, and chess.
He also invented many devices, including rocket-powered flying discs, a motorized pogo stick, and a flame-throwing trumpet for a science exhibition. One of his more humorous devices was a box kept on his desk called the "Ultimate Machine", based on an idea by Marvin Minsky. Otherwise featureless, the box possessed a single switch on its side. When the switch was flipped, the lid of the box opened and a mechanical hand reached out, flipped off the switch, then retracted back inside the box. Renewed interest in the "Ultimate Machine" has emerged on YouTube and Thingiverse.
In addition, he built a device that could solve the Rubik's Cube puzzle.

He is also considered the co-inventor of the first wearable computer along with Edward O. Thorp. The device was used to improve the odds when playing roulette.

Shannon came to MIT in 1956 to join its faculty and to conduct work in the Research Laboratory of Electronics (RLE).
He continued to serve on the MIT faculty until 1978. To commemorate his achievements, there were celebrations of his work in 2001, and there are currently five statues of Shannon: one at the University of Michigan; one at MIT in the Laboratory for Information and Decision Systems; one in Gaylord, Michigan; one at the University of California, San Diego; and another at Bell Labs. After the breakup of the Bell system, the part of Bell Labs that remained with AT&T was named Shannon Labs in his honor.

Robert Gallager has called Shannon the greatest scientist of the 20th century. According to Neil Sloane, an AT&T Fellow who co-edited Shannon's large collection of papers in 1993, the perspective introduced by Shannon's communication theory (now called information theory) is the foundation of the digital revolution, and every device containing a microprocessor or microcontroller is a conceptual descendant of Shannon's 1948 publication: "He's one of the great men of the century. Without him, none of the things we know today would exist. The whole digital revolution started with him."

Shannon developed Alzheimer's disease, and spent his last few years in a Massachusetts nursing home. He was survived by his wife, Mary Elizabeth Moore Shannon; a son, Andrew Moore Shannon; a daughter, Margarita Shannon; a sister, Catherine S. Kay; and two granddaughters. Shannon was oblivious to the marvels of the digital revolution because his mind was ravaged by Alzheimer's disease. His wife mentioned in his obituary that had it not been for Alzheimer's "he would have been bemused" by it all.

Theseus, created in 1950, was a magnetic mouse controlled by a relay circuit that enabled it to move around a maze of 25 squares. Its dimensions were the same as those of an average mouse. The maze configuration was flexible and could be modified at will. The mouse was designed to search through the corridors until it found the target. Having travelled through the maze, the mouse could then be placed anywhere it had been before, and because of its prior experience it could go directly to the target. If placed in unfamiliar territory, it was programmed to search until it reached a known location and then proceed to the target, adding the new knowledge to its memory and thus learning. Shannon's mouse appears to have been the first learning device of its kind.

In 1950 Shannon published a groundbreaking paper on computer chess entitled Programming a Computer for Playing Chess. It describes how a machine or computer could be made to play a reasonable game of chess.
His process for having the computer decide on which move to make is a minimax procedure, based on an evaluation function of a given chess position. Shannon gave a rough example of an evaluation function in which the value of the black position was subtracted from that of the white position. Material was counted according to the usual relative values of the chess pieces (1 point for a pawn, 3 points for a knight or bishop, 5 points for a rook, and 9 points for a queen). He considered some positional factors, subtracting ½ point for each doubled pawn, backward pawn, and isolated pawn. Another positional factor in the evaluation function was mobility, adding 0.1 point for each legal move available. Finally, he considered checkmate to be the capture of the king, and gave the king the artificial value of 200 points.

The evaluation function is clearly for illustrative purposes, as Shannon stated. For example, according to the function, pawns that are doubled as well as isolated would have no value at all, which is clearly unrealistic.
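
The weights described above can be collected into a small scoring routine. The following is a minimal sketch that assumes the piece, pawn-structure, and mobility counts have already been tallied for each side. The names `SideCounts`, `side_score`, and `evaluate` are hypothetical and chosen for this illustration, and the code is not taken from Shannon's paper.

```python
from dataclasses import dataclass


@dataclass
class SideCounts:
    """Hypothetical per-side tallies for one chess position."""
    kings: int = 1
    queens: int = 0
    rooks: int = 0
    bishops: int = 0
    knights: int = 0
    pawns: int = 0
    doubled: int = 0    # doubled pawns
    backward: int = 0   # backward pawns
    isolated: int = 0   # isolated pawns
    mobility: int = 0   # number of legal moves available


def side_score(s: SideCounts) -> float:
    """Score one side using the weights described in the text."""
    material = (200 * s.kings + 9 * s.queens + 5 * s.rooks
                + 3 * (s.bishops + s.knights) + 1 * s.pawns)
    pawn_penalty = 0.5 * (s.doubled + s.backward + s.isolated)
    return material - pawn_penalty + 0.1 * s.mobility


def evaluate(white: SideCounts, black: SideCounts) -> float:
    """White's score minus Black's score; positive values favour White."""
    return side_score(white) - side_score(black)


if __name__ == "__main__":
    # A single pawn that is both doubled and isolated: 1 - 0.5 - 0.5
    print(side_score(SideCounts(kings=0, pawns=1, doubled=1, isolated=1)))
```

The last line reproduces the oddity noted above: a pawn that is both doubled and isolated scores 1 - 0.5 - 0.5, so it contributes nothing to the total.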

Shannon and his wife Betty also used to go on weekends to Las Vegas with MIT mathematician Ed Thorp, and made very successful forays in blackjack using game-theoretic methods co-developed with fellow Bell Labs associate, physicist John L. Kelly Jr., based on principles of information theory. They made a fortune, as detailed in the book Fortune's Formula by William Poundstone and corroborated by the writings of Elwyn Berlekamp, Kelly's research assistant in 1960 and 1962. Shannon and Thorp also applied the same theory, later known as the Kelly criterion, to the stock market with even better results.
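
The text does not spell out the criterion itself. As a hedged illustration, the sketch below uses its commonly cited form for a simple repeated bet that is either won or lost: with win probability p and net odds b (profit per unit staked), the fraction of the bankroll to stake is f = (b·p - (1 - p)) / b. The function name `kelly_fraction` and its parameters are assumptions for this example, and real blackjack or stock-market applications are considerably more involved.

```python
def kelly_fraction(p_win: float, net_odds: float) -> float:
    """Fraction of bankroll to stake on a simple win/lose bet.

    Hypothetical helper for illustration.
    p_win:    probability of winning the bet (between 0 and 1)
    net_odds: profit per unit staked when the bet wins (must be > 0)
    """
    if not 0.0 <= p_win <= 1.0:
        raise ValueError("p_win must lie between 0 and 1")
    if net_odds <= 0.0:
        raise ValueError("net_odds must be positive")
    edge = net_odds * p_win - (1.0 - p_win)
    # With no positive edge, the criterion says not to bet at all.
    return max(0.0, edge / net_odds)


if __name__ == "__main__":
    # Even-money bet won 60% of the time: stake about a fifth of the bankroll.
    print(kelly_fraction(0.6, 1.0))
```

Under this form, an even-money bet with no edge (p = 0.5) gets a stake of zero, which matches the intuition that the method only pays off when the player holds a genuine advantage.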

Shannon formulated a version of Kerckhoffs' principle as "the enemy knows the system". In this form it is known as "Shannon's maxim".